Other Topics in SLR

David Gerard

2024-02-28

Learning Objectives

Chapter 4 of KNNL (skip 4.4).

Simultaneous Inference

Measurement Error

Inverse Prediction

The following are three issues that you should be aware of. So you should be able to determine that these are issues based on a study design. But you can Google solutions if you ever come across them.

Simultaneous Inference

Motivation

Suppose we run \(m\) hypotheses, each rejecting the null when \(p < 0.05\).

If all of the null’s are true, what is the probability that we would reject at least one null?

If these hypotheses are all independent, then we have \[\begin{align} Pr(\text{at least one reject }|\text{ all null}) &= 1 - Pr(\text{no reject }|\text{ all null})\\ &=1 - 0.95^m \end{align}\]

That means that if we ran \(m = 100\) hypothesis tests, the probability that at least one would be rejected is \(1 - 0.95^{100} = 0.9941\). So we would be almost guaranteed to have a false positive (falsely rejecting the null) somewhere.

Similarly, suppose we construct 100 prediction intervals for 100 new values. What is the probability that at least one prediction interval does not cover the new observation’s value? Assuming independence, it is the same calculation \(1 - 0.95^{100} = 0.9941\).

So we are not really controlling for what we thought we were controlling.

Coming up with new measures to control, or adjust testing procedures to account for multiple tests, is called simultaneous inference.

Bonferonni Correction

One solution is to come up with adjusted \(p\)-values.

Given a family of hypothesis tests, the adjusted \(p\)-value of a test is less than \(\alpha\) if and only if the probability of at least one Type I error (among all tests) is at most \(\alpha\).

That is, if you reject when the adjusted \(p\)-value is less than \(\alpha\), then the probability (prior to sampling) of any test producing a Type I error is less than \(\alpha\).

The most basic way to obtain adjusted \(p\)-values is the Bonferonni procedure where you multiply the \(p\)-value by the number of tests.

Why does this work? Suppose we have \(m\) tests, of which \(m_0\) are actually null. The family-wise error rate of such a procedure is

\[\begin{align} &Pr(\text{Type I error among the }m_0\text{ tests})\\ &= Pr(mp_1 \leq \alpha \text{ or } mp_2\leq\alpha \text{ or } \cdots \text{ or } mp_{m_0}\leq \alpha)\\ &= Pr(p_1 \leq \alpha/m \text{ or } p_2\leq\alpha/m \text{ or } \cdots \text{ or } p_{m_0}\leq \alpha/m)\\ &\leq Pr(p_1 \leq \alpha/m) + Pr(p_2 \leq \alpha/m) + \cdots + Pr(p_{m_0} \leq \alpha/m)\\ &= \alpha/m + \alpha / m + \cdots + \alpha / m~(m_0 \text{summations})\\ &= m_0\alpha/m\\ &\leq m\alpha/m\\ &= \alpha. \end{align}\]

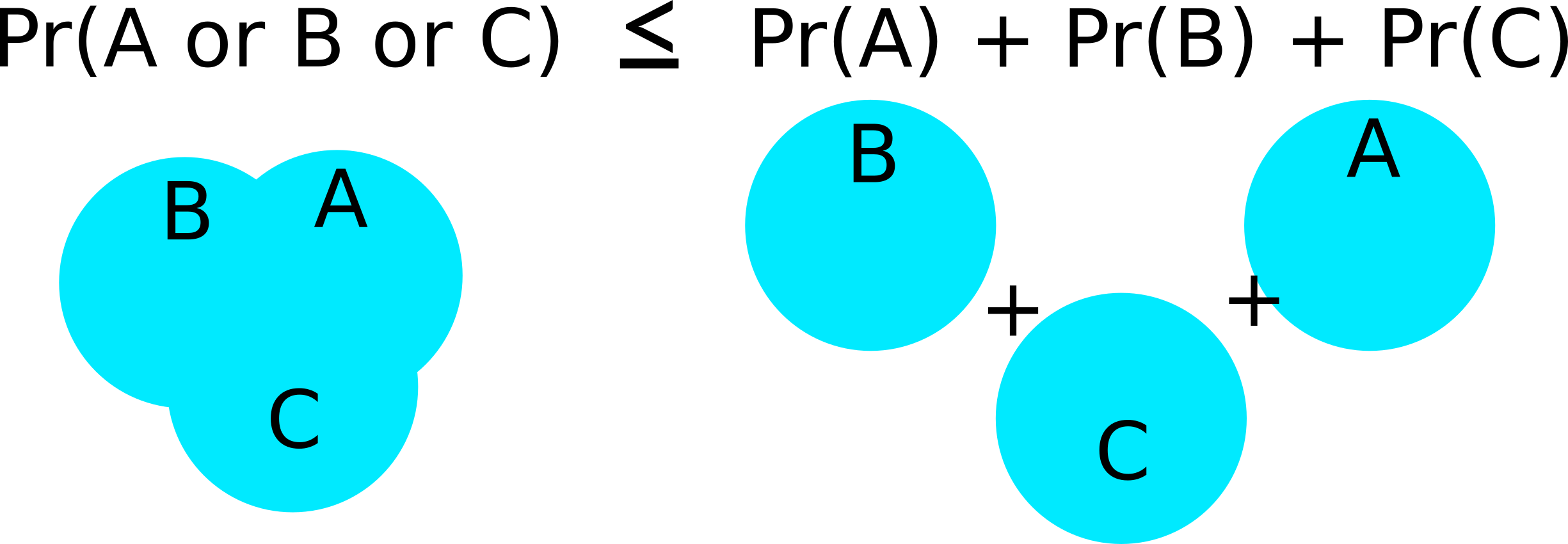

The first inequality follows from Bonferonni’s inequality

The Bonferonni confidence interval correction:

- Recall: confidence intervals of the form: estimate \(\pm\) multiplier \(\times\) standard error.

- Before, we used the multiplier

qt(1 - alpha / 2, df)most often (typicallyalpha = 0.05). - The Bonferonni procedure uses

qt(1 - alpha / (2m), df), wheremis the number of tests.

Exercise: Prove that family-wise coverage probability of the above procedure for confidence intervals is at least 0.95. (It’s easier to start with just two intervals).

There are more sophisticated methods that work better to construct simultaneous confidence intervals, or run multiple hypothesis tests. You can Google these.

In R, to obtain adjusted \(p\)-values, just use the

p.adjust()function, inserting your vector of \(p\)-values. The default is a better option than Bonferonni.p.adjust(p = c(0.1, 0.2, 0.01, 0.05))## [1] 0.20 0.20 0.04 0.15p.adjust(p = c(0.1, 0.2, 0.01, 0.05), method = "bonferroni") ## not as good## [1] 0.40 0.80 0.04 0.20When you write up an interpretation, say something like:

We have evidence against the null (Bonferonni corrected \(p\)-value of <insert \(p\)-value>).

This \(p\)-value is interpreted as a procedure that controls the family-wise error rate at a particular level (if you reject when the \(p\)-value is below that particular level).

Usual traps of \(p\)-value interpretations hold (probability of data given hypothesis, not probability of hypothesis given data, etc…).

Exercise: At a plumbing supplies company, a consultant studied the relationship between number of work units performed (

work), and the total variable labor cost in warehouses during a test period (cost). The data are presented below. Provide prediction intervals for new warehouses that perform 50, 100, and 150 work units, using a procedure with a family-wise coverage probability of at least 0.9.library(tidyverse) plumb <- tribble(~work, ~cost, 20, 114, 196, 921, 115, 560, 50, 245, 122, 575, 100, 475, 33, 138, 154, 727, 80, 375, 147, 670, 182, 828, 160, 762)

Measurement Error

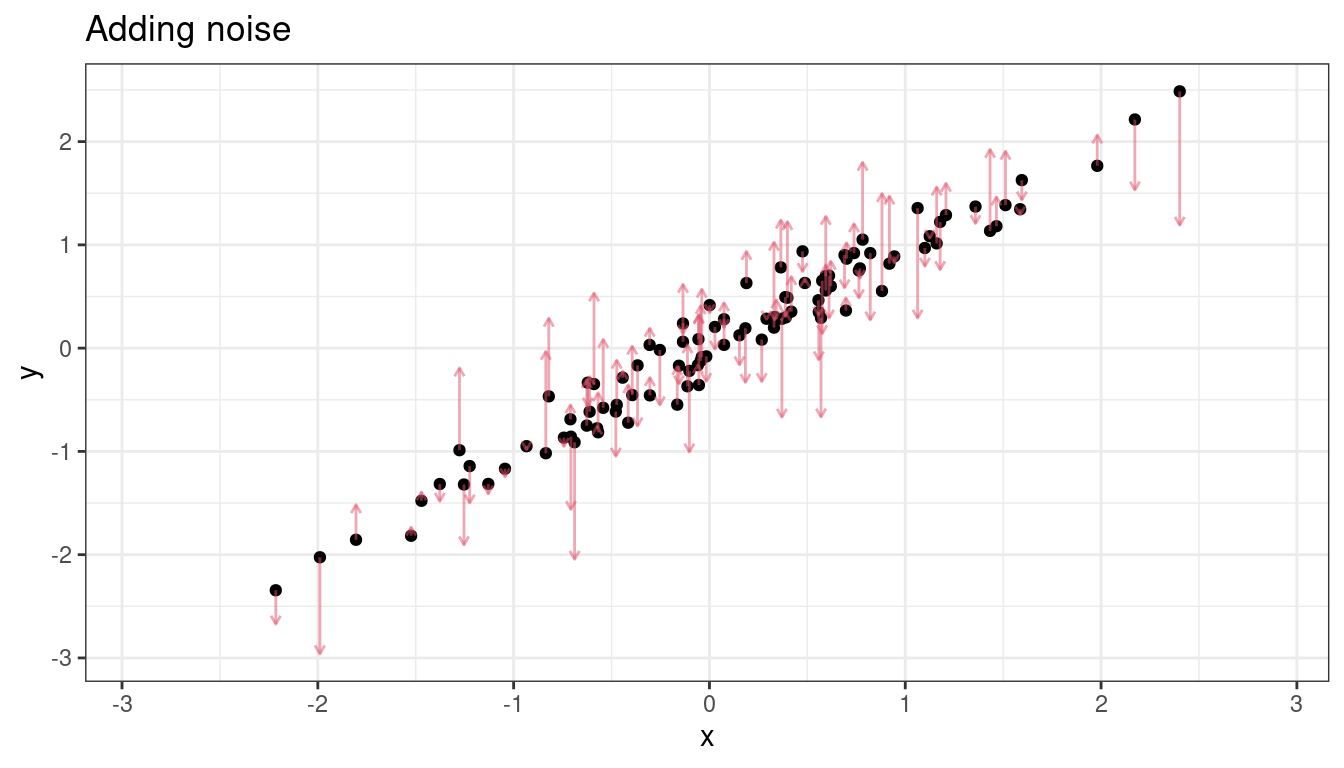

Graphical motivation

Sometimes, we are interested in the relationship between two variables, but we only observe noisy versions of those variables.

Examples:

- Suppose a researcher was interested in studying the association between corn yield (\(Y\)) and soil nitrogen level (\(X\)). There are two possible sources of errors when measuring nitrogen level. First, a small soil sample is only taken at a single location on a large plot, so this small sample is a noisy estimate of mean nitrogen level on the whole plot. Second, the chemical assay to measure nitrogen level is not 100% accurate.

- A research problem I come across often is studying the association between some biological trait (\(Y\)) and the dosage of an allele at a locus (\(X\)). These dosages are estimated from read-counts from sequencing assays, and so are not perfectly accurate.

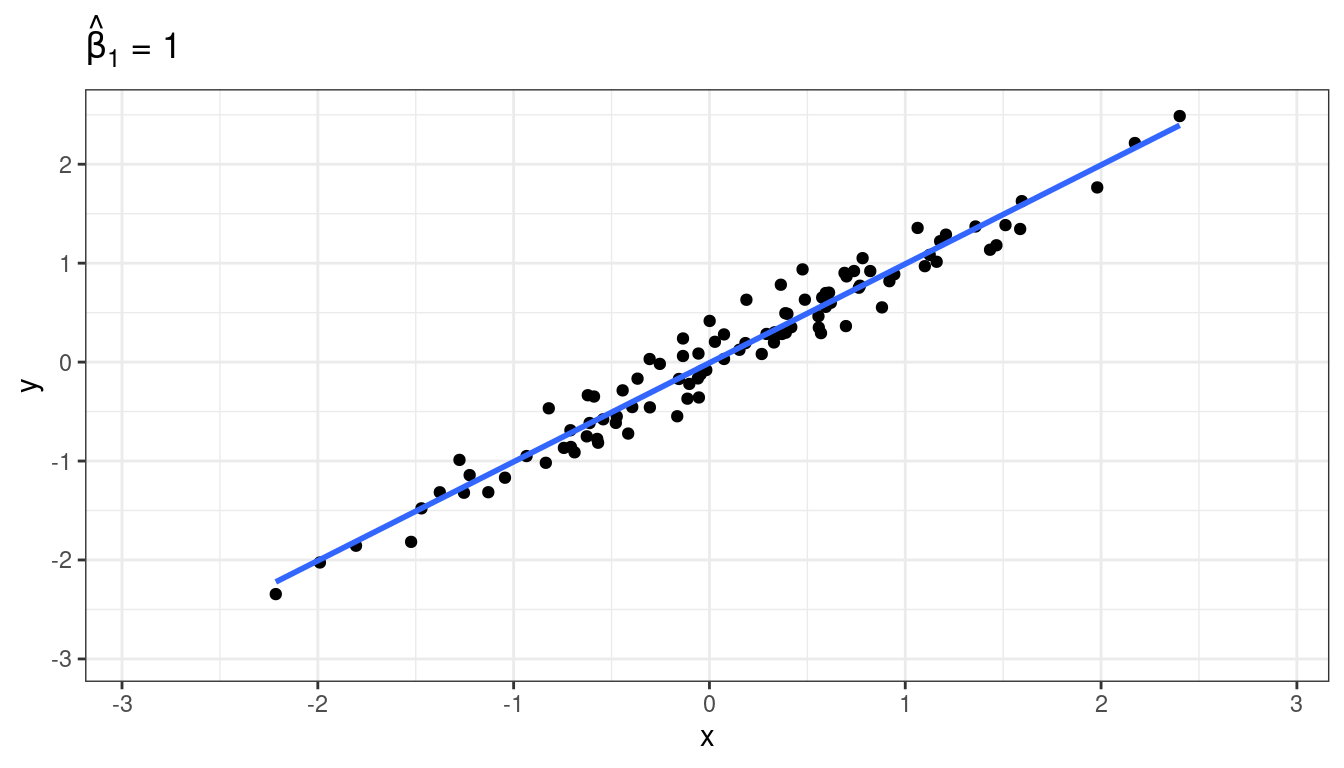

Suppose there is a strong underlying relationship between \(x\) and \(y\).

Measurement error for \(X\), attenuation

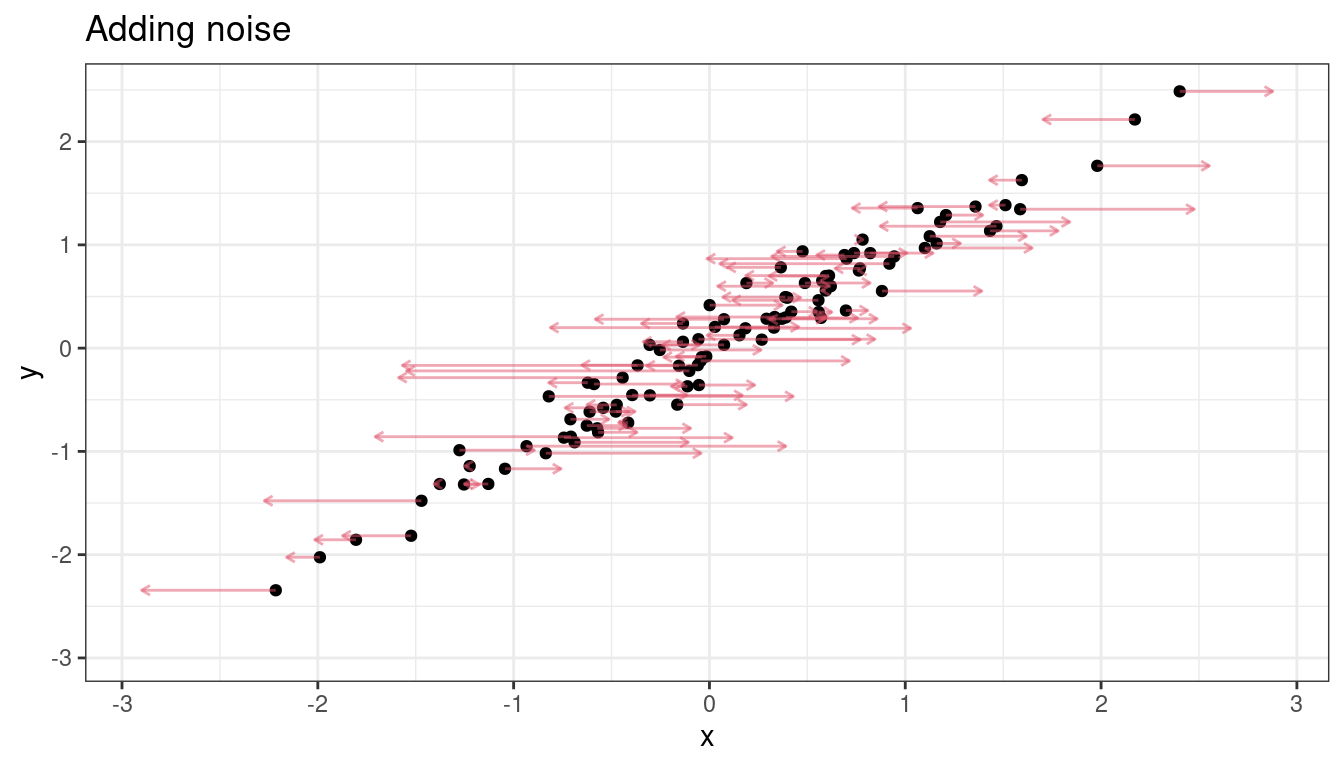

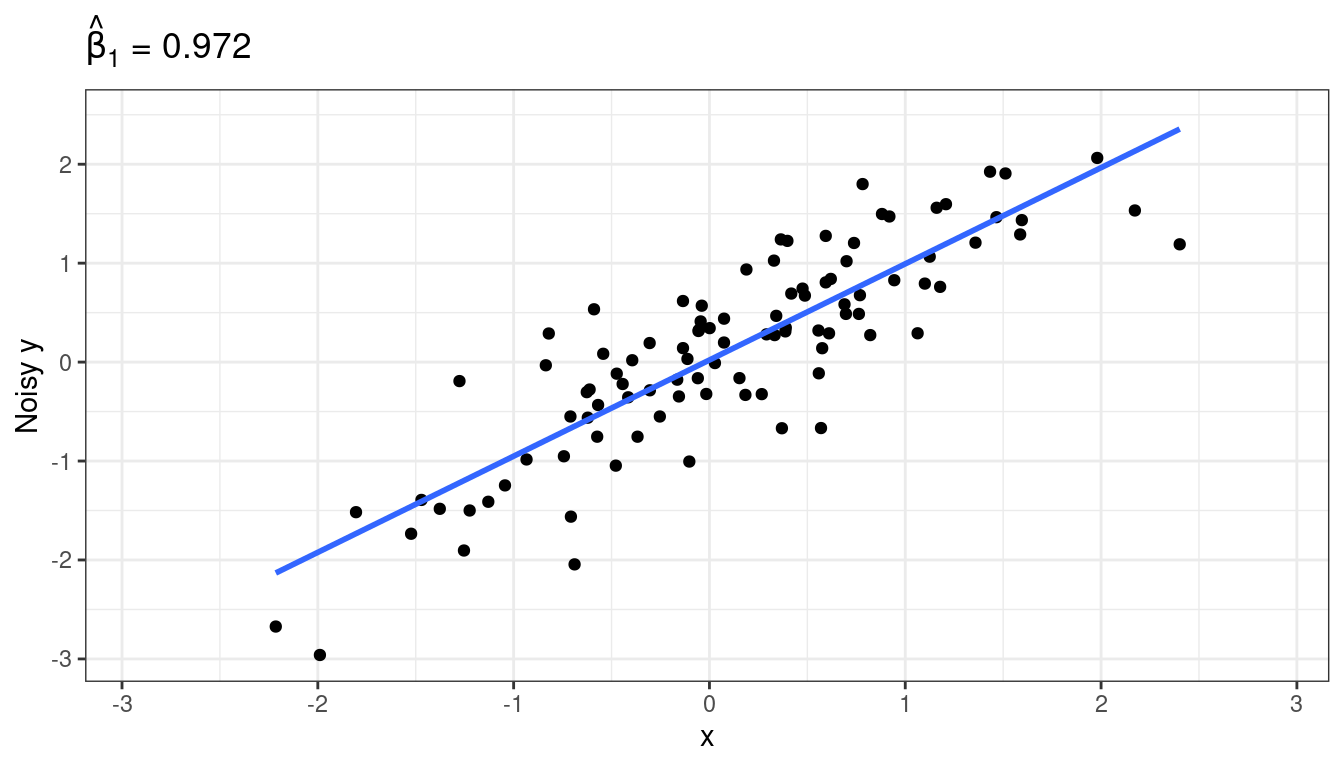

Let’s add noise in the \(x\) direction.

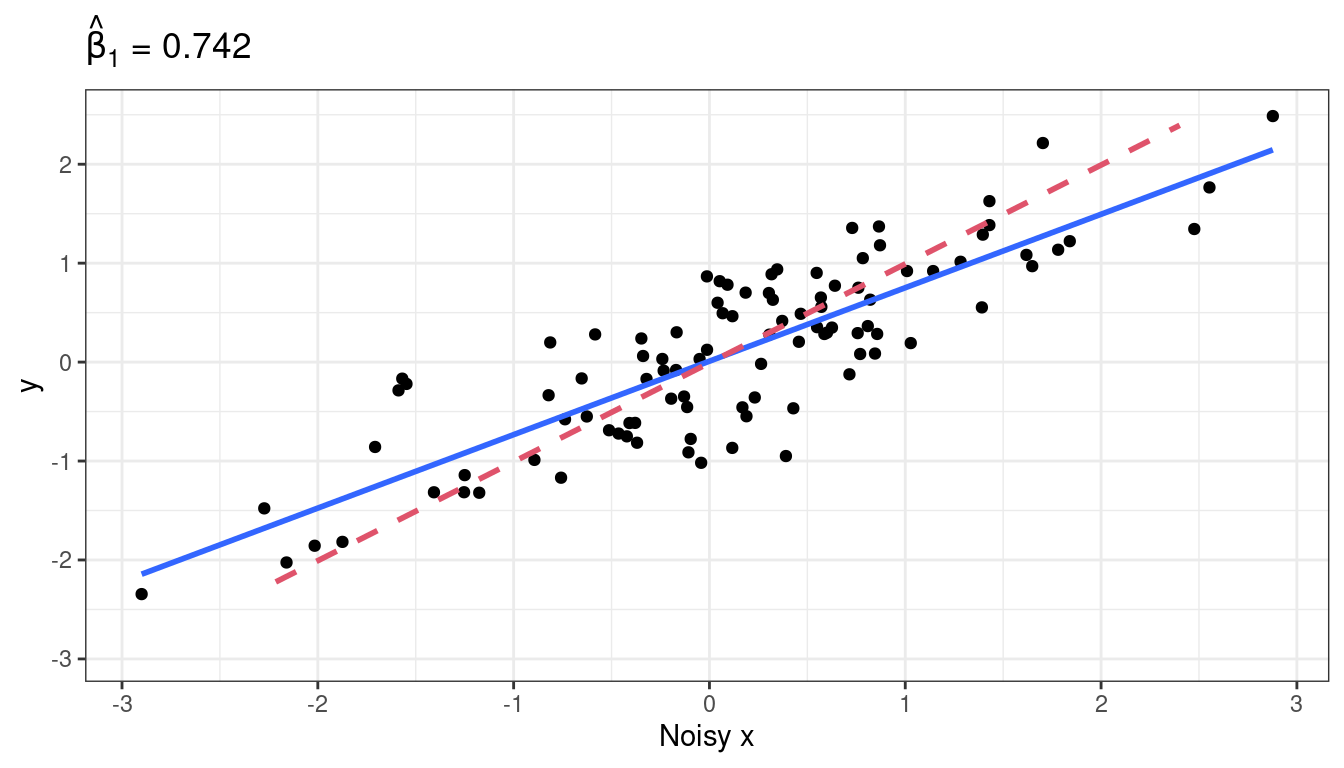

\(\hat{\beta}_1\) is now much smaller.

Why does this happen? Suppose the true underlying relationship is \[\begin{align} Y_i &= \beta_0 + \beta_1 X_i + \epsilon_i, ~\epsilon_i \overset{iid}{\sim} N(0, \sigma^2)\\ X_i^* &= X_i + \delta_i,~\delta_i \overset{iid}{\sim} N(0, \tau^2) \end{align}\]

However, we only observe \(X_i^*\), not \(X_i = X_i^* - \delta_i\). So, converting the true model in terms of \(X_i^*\), we have \[\begin{align} Y_i &= \beta_0 + \beta_1 X_i + \epsilon_i\\ &= \beta_0 + \beta_1 (X_i^* - \delta) + \epsilon_i\\ &= \beta_0 + \beta_1 X_i^* - \beta_1\delta + \epsilon_i\\ &= \beta_0 + \beta_1 X_i^* + \epsilon^*_i. \end{align}\]

Thus, if we fit the model \(E[Y_i] = \beta_0 + \beta_1 X_i^*\), then our error term is \(\epsilon^*_i = -\beta_1\delta_i + \epsilon_i\).

Since \(\epsilon^*_i\) (\(=-\beta_1\delta_i + \epsilon_i\)) and \(X^*\) (\(=X_i + \delta_i\)) both depend on the value of \(\delta_i\), they are correlated.

\[\begin{align} cov(X^*, \epsilon^*) &= cov(X_i + \delta_i, -\beta_1\delta_i + \epsilon_i)\\ &= -\beta_1 cov(\delta_i, \delta_i)\\ &= -\beta_1 var(\delta_i)\\ &= -\beta_1\tau^2. \end{align}\]

So, if \(\beta_1\) is positive, there is a negative correlation between \(X^*_i\) and \(\epsilon^*_i\). What this means is that larger values of \(X_i^*\) (larger than \(\bar{X}^*\)) tend to have more negative error terms, while smaller values of \(X_i^*\) (smaller than \(\bar{X}^*\)) tend to have more positive error terms. So this correlation smooshes the line toward horizontal.

Exercise: A researcher was setting up an experiment to test the efficacy of some antibiotic. They then randomly assigned petri dishes to obtain different concentrations (\(X\)) of this antibiotic, and then measured the density of bacteria in each petri dish. Why is this not an example of measurement error, even though the antibiotic concentrations were randomly assigned?

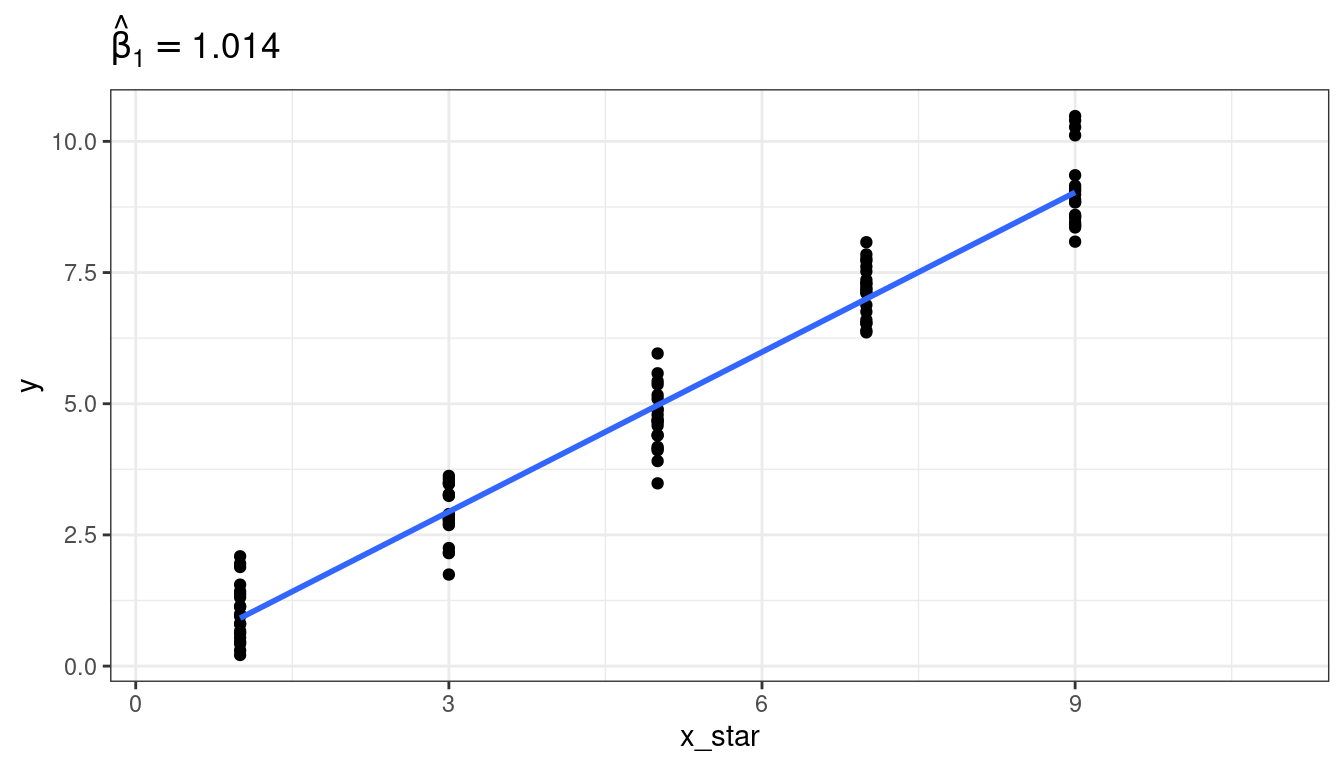

Measurement error for \(Y\), nbd

Adding noise to \(y\) changes the estimated \(\sigma^2\), not the estimated \(\beta_1\) (on average).

Mathematically, what is going on is: \[\begin{align} Y_i^* &= Y_i + \delta_i\\ Y_i &= \beta_0 + \beta_1 X_i + \epsilon_i\\ \Rightarrow Y_i^* - \delta_i &= \beta_0 + \beta_1 X_i + \epsilon_i\\ \Rightarrow Y_i^* &= \beta_0 + \beta_1 X_i + \delta_i + \epsilon_i\\ \Rightarrow Y_i^* &= \beta_0 + \beta_1 X_i + \epsilon_i^*\\ \end{align}\]

The reason why this is OK, is that \(\epsilon_i^*\) is not correlated with the observed predictor variables, unlike the previous section, so it does not smoosh the line toward horizontal.

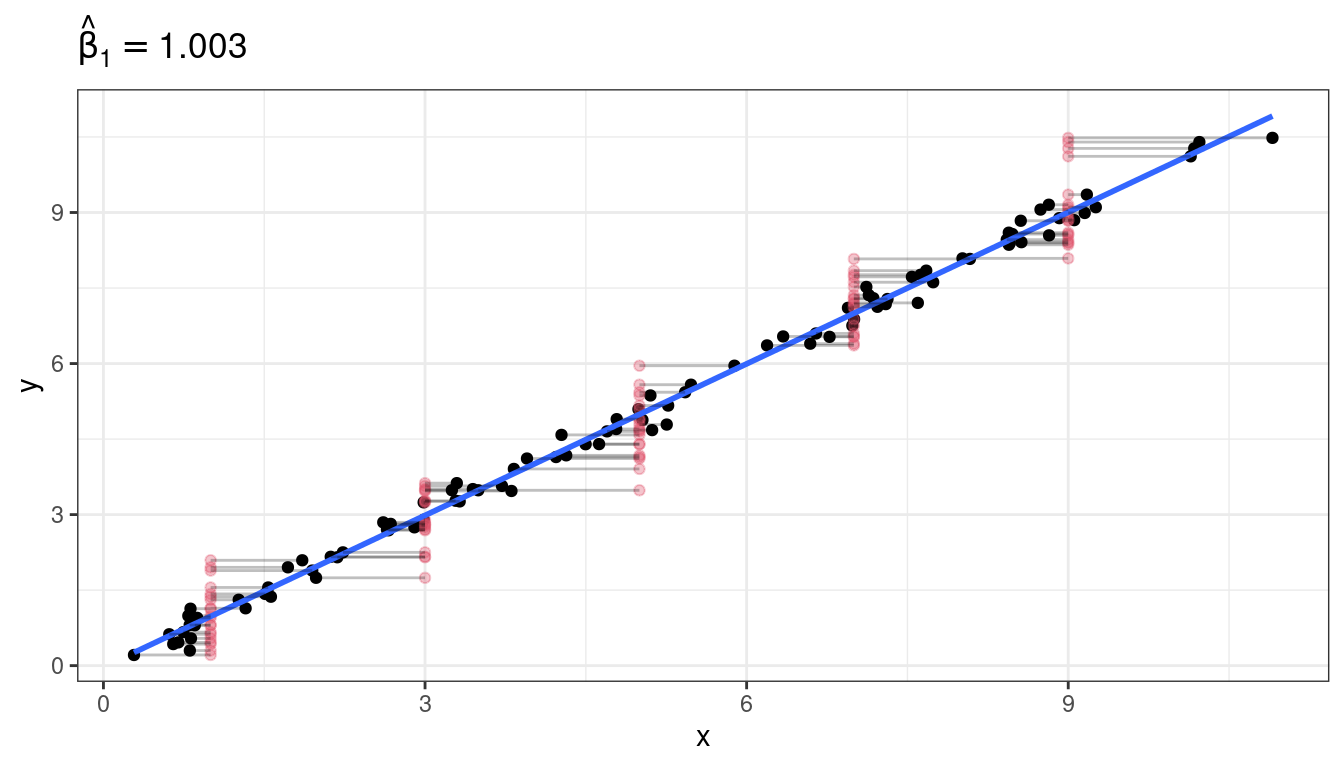

Berkson model: set \(X^*\), nbd even if \(X\) is noisy

To add even more confusion here, suppose that we precisely set \(X^*\), but the true value of \(X\) might vary from this precisely set value. Then everything turns out to be OK.

Examples:

- You set the temperature on an experiment, but the temperature might vary from your setting.

- You set the pressure according to a gauge, but the pressure might vary from this.

Previously, we had \[ X_i^* = X_i + \delta_i \] Now, we have \[ X_i = X_i^* + \delta_i \] This new situation is called a Berkson Model and does not pose issues to OLS.

Why? As before, what we have is a modified model \[\begin{align} Y_i &= \beta_0 + \beta_1 X_i^* + \epsilon_i^*. \end{align}\] However, because the \(X_i^*\)’s were fixed by us, they are constants and so must be uncorrelated with the \(\epsilon_i^*\)’s.

Graphical demonstration

Exercise: Each of the following describes a possible predictor. State whether using that predictor would be an example of a Berkson model versus a “classical error” model.

You want to create the world’s best brownies. So you are doing an experiment on how much sugar to add. You use a scale to measure out known pre-set weights of sugar. But you know that your scale is old and not too accurate.

For each batch of brownies, you extract a small crumb at a randomly located position in the batch and send it in for pH analysis. The pH for the sample is accurate, but you worry that you are not getting the average pH of the brownie due to heterogeneous pH throughout the brownie.

You choose to vary the temperature of your oven for each batch, but you know your oven is a little fidgety.

You measure the density of the resulting brownies by pushing each with your finger and rating the density on a scale of 1 to 10.

You set up a panel to taste test your brownies. To control for brownie savviness, one question asks how frequently the individual eats brownies, but you worry about memory issues.

NOTE: What gets you into trouble is when \(X_i^*\) is caused by \(X_i\). When \(X_i\) is caused by \(X_i^*\), then we have a Berkson model and no issues. But having \(X_i\) be caused by \(X_i^*\) does not necessarily imply that we are doing an experiment.

One example that is Berkson but not a controlled experiment:

- You are interested in studying the effects of dust exposure on lung disease. You have a group of miners from different mines, but you measure dust within their mines, not the actual level of dust the miners were exposed to. This is Berkson because the dust in the mine causes the dust exposure in the miner, so the proper model is \(X_i = X_i^* + \delta_i\).

Measurement error conclusions:

- To conclude:

- If your measurements of \(y\) are noisy, this is not a problem since that noise gets picked up by the \(\epsilon_i\)’s.

- If your measurements of \(x\) are noisy and you did not precisely set them, then your regression coefficient estimate of the slope will be “attenuated” toward zero.

- If your measurements of \(x\) are noisy, but you did precisely set them, then you can proceed with OLS as usual.

Final comments

Measurement error does not effect hypothesis testing as long as you are testing \(H_0: \beta_1 = 0\). If you are testing for a different value of \(\beta_1\), then it will bias your \(p\)-values low.

- Why? If \(\beta_1\) actually is 0, then measurement error cannot attenuate estimates of \(\beta_1\) closer to 0, because they are already unbiased at 0.

So measurement error is important for estimation, not hypothesis testing (usually).

If the measurement error is really small relative to the scale of the \(X\) variable, you don’t have to worry about this bias so much.

- “small” means \(\frac{\tau^2}{var(X) + \tau^2} < 0.05\) (ratio of measurement error variance to variance of the true \(X_i\)’s plus the measurement error variance).

This section assumed that the measurement error had mean 0 (so negative and positive error cancel out). You run into much worse trouble if the measurement error is biased in one direction or the other.

You fix the effects of measurement error by having prior knowledge on the measurement error variance (\(\tau^2\)), typically by having repeat observations at a given \(X_i\) (e.g. \(X_{i1}^* = X_i + \delta_{i1}\), \(X_{i2}^* = X_{i} + \delta_{i2}\), \(\ldots\)). See Carroll et al. (2006) for details.

Inverse Prediction

Sometimes you do a regression of \(Y\) on \(X\), but want to predict the value of \(X\) that results in a given value of \(Y\). This is called inverse prediction or, sometimes, calibration.

- i.e. predict \(X\) from \(Y\).

Examples:

- You have a regression model for how much a drug (\(X\)) will decrease cholesterol (\(Y\)). You have a new patient that you want to reduce cholesterol by a certain amount (\(Y_{i(new)}\)). How much drug should you give them (\(X_{i(new))}\)?

- You have a model for pH of a steer carcass over time. A carcass pH should be about 6 (\(Y_{i(new)}\)) before freezing. How long should you wait (\(X_{i(new)}\))?

This second example comes from the Sleuth 3 package.

library(Sleuth3) data("case0702") steer <- case0702 glimpse(steer)## Rows: 10 ## Columns: 2 ## $ Time <int> 1, 1, 2, 2, 4, 4, 6, 6, 8, 8 ## $ pH <dbl> 7.02, 6.93, 6.42, 6.51, 6.07, 5.99, 5.59, 5.80, 5.51, 5.36We still have the model \[ Y_i = \beta_0 + \beta_1 X_i + \epsilon_i \]

If we have the estimated regression line \[ y = \hat{\beta}_0 + \hat{\beta_1}x \]

Then the predicted value of \(X_{i(new)}\) is \[ \hat{X}_{i(new)} = \frac{Y_{i(new)} - \hat{\beta}_0}{\hat{\beta}_1} \]

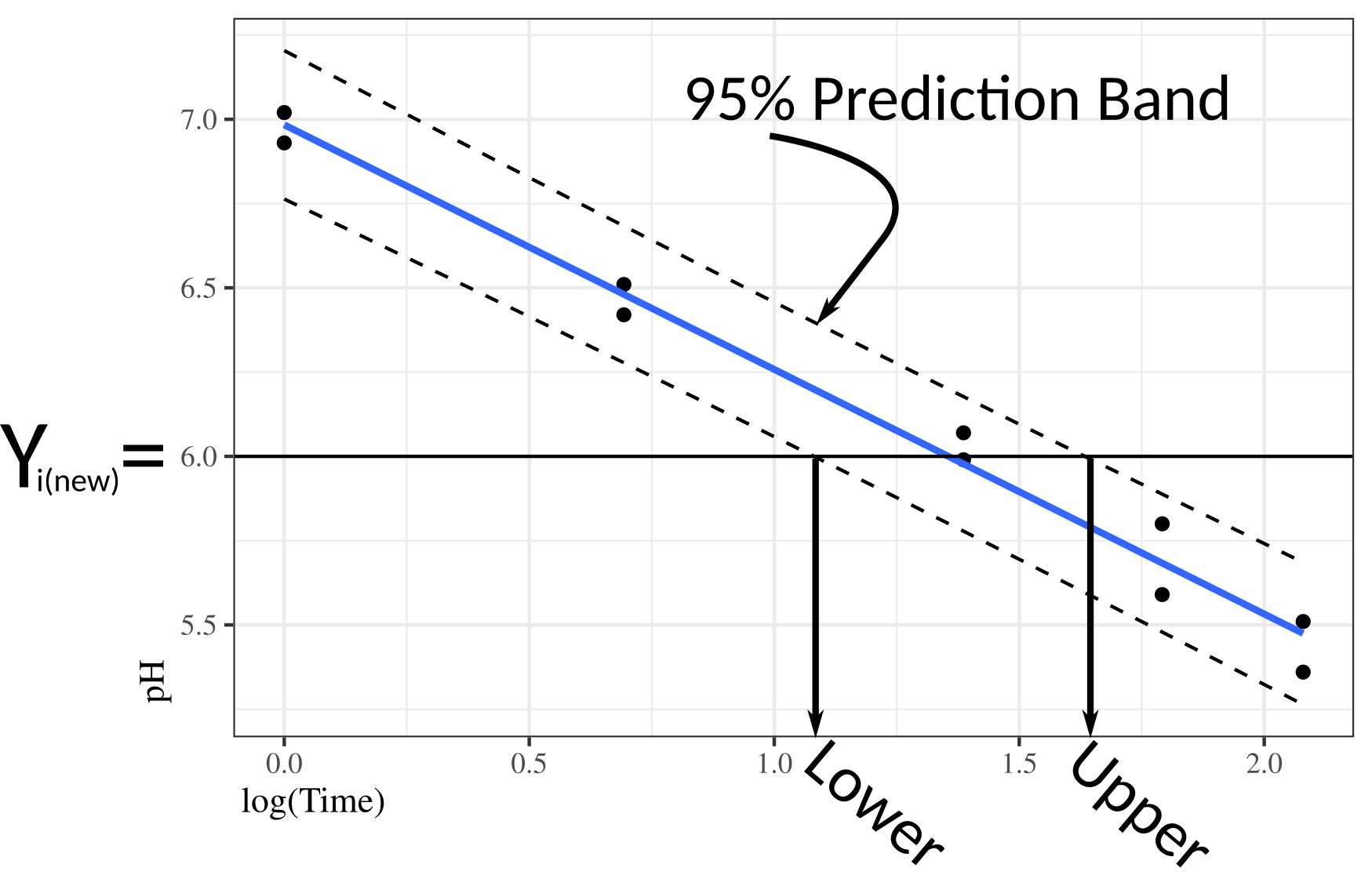

To obtain a 95% prediction interval, you plot the 95% prediction bands, draw a horizontal line at the desired \(Y_{i(new)}\), then find the values of \(X\) that cross those prediction bands.

You interpret the prediction interval still in terms of variability in \(Y\) (not \(X\)). We basically find a region of \(X\) values where \(Y_{i(new)}\) would be included in the prediction interval. \(X\) values outside of this region would not have \(Y_{i(new)}\) in the prediction interval.

Why not just regress \(X\) on \(Y\)?

- \(Y\) is noisy and \(X\) is precise (see measurement error section above).

- We believe that \(X\) might cause \(Y\) (should “time” ever be a response variable for anything?)

Use the the

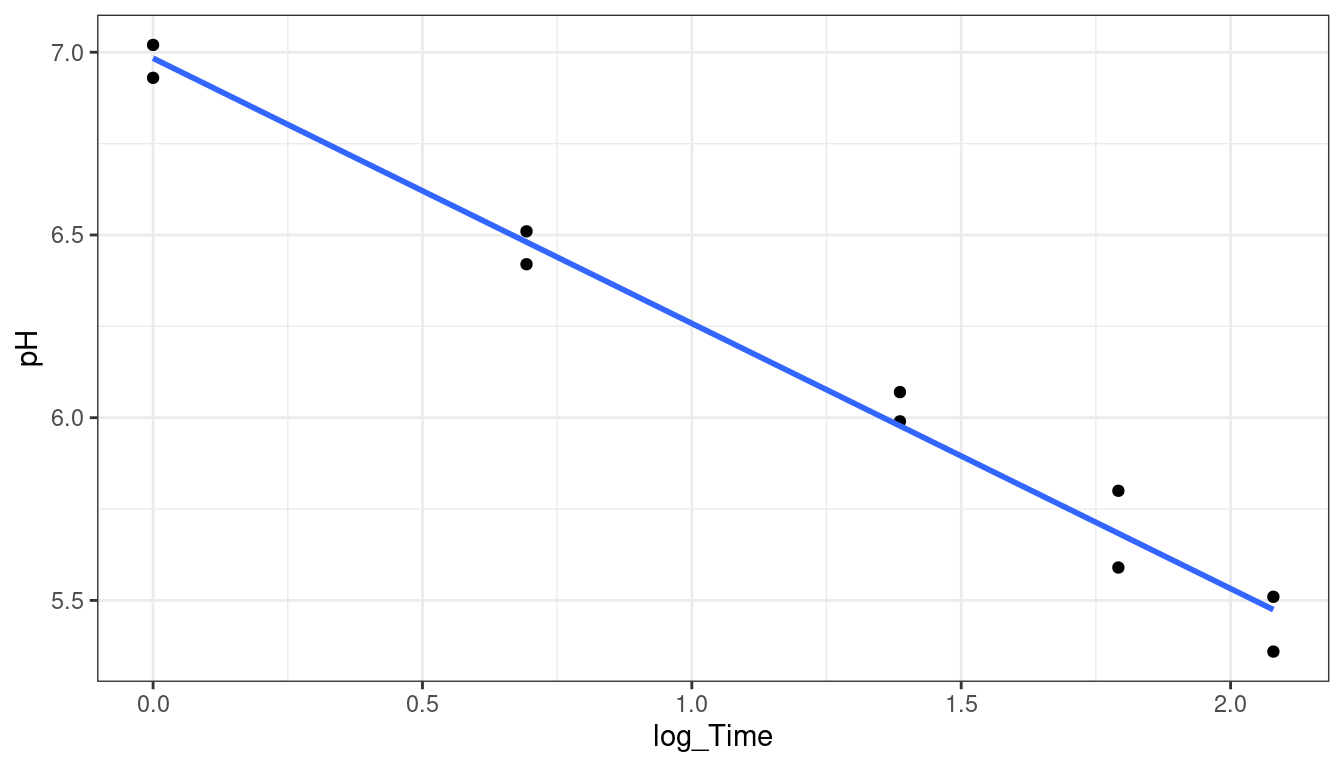

calibrate()function from the{investr}to do inverse regression in R. (I logged theTimevariable to correct for issues in the fit of the linear model).library(investr) steer <- mutate(steer, log_Time = log(Time)) ## always plot data first ggplot(data = steer, mapping = aes(x = log_Time, y = pH)) + geom_point() + geom_smooth(method = "lm", se = FALSE)

lmout <- lm(pH ~ log_Time, data = steer) cout <- calibrate(object = lmout, y0 = 6) cout## estimate lower upper ## 1.355 1.081 1.634These are the predictions of

log(Time). To predictTime, we just exponentiate these values.exp(cout$estimate)## [1] 3.879exp(cout$lower)## [1] 2.948exp(cout$upper)## [1] 5.124So we predict that it takes anywhere from 2.9 to 5.1 hours to reach a pH of 6.

Exercise: the

crystaldata from the{investr}package measures the time (time) it takes to grow crystals to a final weight (weight) in grams. You can load it in R viadata("crystal") glimpse(crystal)## Rows: 14 ## Columns: 2 ## $ time <dbl> 2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 26, 28 ## $ weight <dbl> 0.08, 1.12, 4.43, 4.98, 4.92, 7.18, 5.57, 8.40, 8.81, 10.81, 11…How long does it take a crystal to reach 10 g?